False positives

For the last few months I have been working on a Django app that computes, among other things, the set of water flows that go into or come out of a water body, also called the water balance. A few weeks ago, one of our customers reported a bug in the computation. After some investigation on my side, it turned out that the computation was correct and that the bug report was a "false positive". Read on for what happened and what I learned from it.

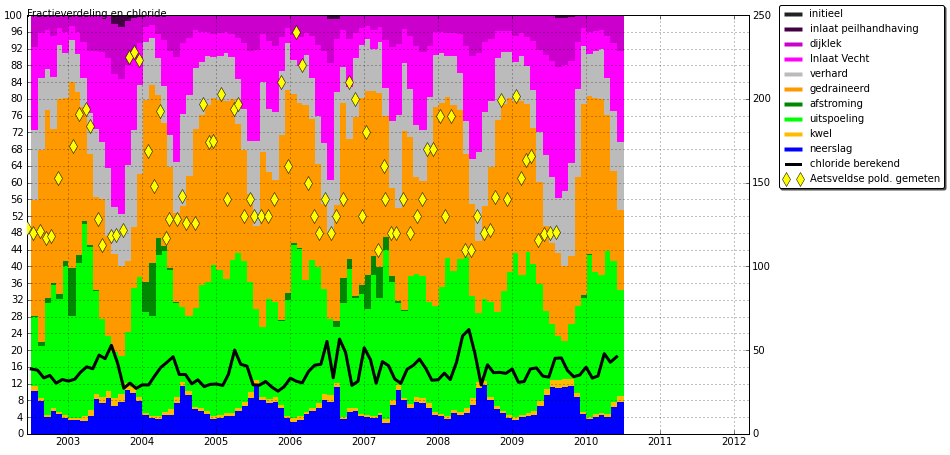

The fraction distribution is a subset of the computed time series that specifies which fraction of the water of the water body comes from which incoming water flow. The picture below, from one of our older websites, depicts such a distribution:

At the start of the first day, we assign the label "initial" to all the water of the water body, so 100% of the water "was initially there". During the day new water comes in, for example due to precipitation and seepage, and water that is already present goes out, for example due to evaporation. At the end of the day, we might calculate that 80% of the water still present was initially there, that 10% came in due to precipitation and another 10% due to seepage. On each day the percentages should add up to 100. However, our customer reported that the total percentage started at 100% but declined as the days went on.
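To make the bookkeeping concrete, here is a minimal sketch of one day's update. It is not the code from the app: the function name `update_fractions` and the mixing model are my own assumptions for illustration. The sketch assumes a fully mixed water body, so outgoing water removes every label proportionally and only the inflows change the distribution.

```python
def update_fractions(fractions, storage, inflows):
    """Return the fraction distribution after one day of inflow.

    Hypothetical helper, assuming a fully mixed water body: outgoing
    water leaves the distribution unchanged, so only inflows matter.

    fractions: label -> fraction of the stored water at the start of the day
    storage:   volume of stored water at the start of the day
    inflows:   label -> volume that came in during the day
    """
    total = storage + sum(inflows.values())
    if total <= 0:
        raise ValueError("the water body holds no water")
    labels = set(fractions) | set(inflows)
    return {
        label: (fractions.get(label, 0.0) * storage + inflows.get(label, 0.0)) / total
        for label in labels
    }


# 80 units were initially there; 10 units of precipitation and 10 units
# of seepage come in during the first day.
day_one = update_fractions(
    {"initial": 1.0},
    storage=80.0,
    inflows={"precipitation": 10.0, "seepage": 10.0},
)
```

With these numbers the result matches the example above: 80% initial, 10% precipitation and 10% seepage, which together add up to 100%.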

I knew the code to compute the fractions had worked in the past, but since then I had refactored and changed other parts of the computation engine. And although the refactorings were supported by a big acceptance test, this test probably did not cover every possible input. As the implementation of the "fraction computer" was not supported by unit tests (that is a long story by itself), I decided to look into the implementation first.

A review of the code did not bring any issues to light. I added some debugging statements and ran the code on another test case. The intermediate results in that output indicated that the fraction distribution was computed correctly. Maybe the problem was with the export of the time series? So I manually checked whether the totals of the 16 different time series added up to 100% for a specific day, and they did.

By now I had the feeling the problem might be in the customer's code. We decided to meet, and it quickly turned out that he simply did not use all the time series that specify the fraction distribution. To be honest, I was glad the problem was not in my code, but the fact remains that a problem in his code cost him and me valuable time.

These kinds of false-positive issues will always crop up. I could have resolved this one more quickly if I had worked the other way around: from the way the customer used the time series, to the time series, to the code. As mentioned, I was not entirely sure about the code and I started from there. Why was I not sure about the code? Did I doubt my own work? Apparently I did, even though I had several automated tests that verified the results. So I decided to develop a script that could check the totals of the fraction distribution. You can find the results of that work in the lizard-waterbalance repo on GitHub, in module lizard_wbcomputation/check_fractions.py.

This script paid for its development time within a day. Since receiving the initial bug report, I had made some changes to the code: fractions were no longer reported as percentages but as real values in the range [0, 1]. The script showed that my modifications were incomplete, as the fractions would start in the 100.0 range... Glad I caught that.
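The idea behind such a check fits in a few lines. The sketch below is not the contents of check_fractions.py; the function signature and the data layout (a dict that maps a label to a list of daily fractions) are assumptions made for illustration.

```python
import math


def check_fractions(fraction_series, tolerance=1e-6):
    """Return (day, total) for every day whose fractions do not sum to 1.0.

    Hypothetical layout: fraction_series maps a label to a list of daily
    fractions in the range [0, 1]; all lists must have the same length.
    """
    lengths = {len(values) for values in fraction_series.values()}
    if len(lengths) > 1:
        raise ValueError("the time series differ in length")
    failures = []
    for day, values in enumerate(zip(*fraction_series.values())):
        total = sum(values)
        if not math.isclose(total, 1.0, abs_tol=tolerance):
            failures.append((day, total))
    return failures


complete = {
    "initial": [1.0, 0.8, 0.6],
    "precipitation": [0.0, 0.1, 0.2],
    "seepage": [0.0, 0.1, 0.2],
}
# Leave out one of the time series, as happened on the customer's side.
incomplete = {label: values for label, values in complete.items() if label != "seepage"}
# Fractions that are still reported as percentages.
percentages = {"initial": [100.0], "precipitation": [0.0]}

print(check_fractions(complete))
print(check_fractions(incomplete))
print(check_fractions(percentages))
```

The complete set passes, the incomplete set shows a total that starts at 1.0 and then declines, and the set that still uses percentages fails on the very first day.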

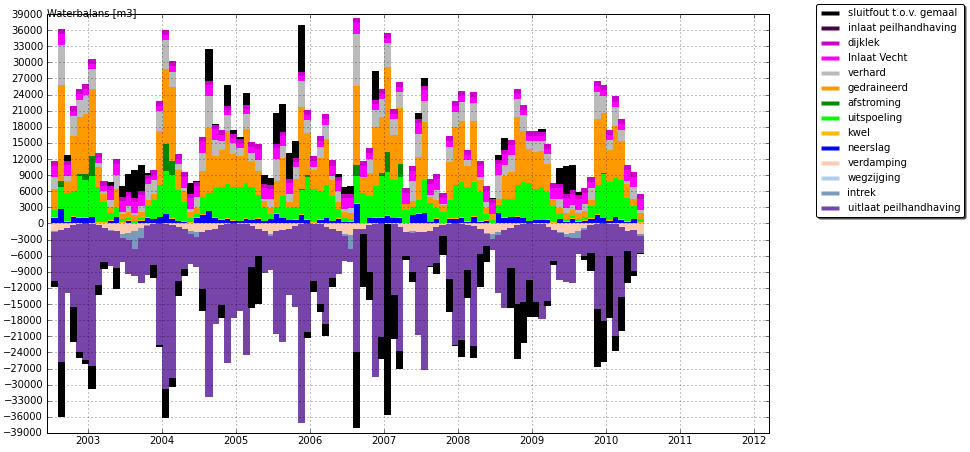

This check would spin off another check. For a water balance the sum of incoming water on a day should equal the sum of outgoing water and a delta of the water storage. The following picture depicts a water balance and its the symmetry:

Up till then, the only way to check this was to inspect the water balance graph to see whether the bars above the horizontal axis mirrored the ones below it. check_fractions provided me with the infrastructure to quickly implement the symmetry check, and so check_symmetry was born; see module lizard_wbcomputation/check_symmetry.py in the same GitHub repo. Again the check paid for itself almost immediately. It turned out that some input time series were always positive where I expected them to be negative.
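A symmetry check along these lines could look as follows. Again, this is a sketch and not the contents of check_symmetry.py: the signature, the data layout and the sign convention (incoming flows positive, outgoing flows negative, a positive storage delta for a rising storage) are assumptions.

```python
import math


def check_symmetry(incoming, outgoing, storage_delta, tolerance=1e-6):
    """Return (day, residual) for every day on which the balance does not close.

    Hypothetical layout: incoming and outgoing map a flow name to a list
    of daily volumes. Assumed sign convention: incoming values are >= 0,
    outgoing values are <= 0, and a positive storage delta means that
    the storage increased on that day.
    """
    failures = []
    for day, delta in enumerate(storage_delta):
        total_in = sum(series[day] for series in incoming.values())
        total_out = sum(series[day] for series in outgoing.values())
        # Incoming water either leaves again or ends up in storage.
        residual = total_in + total_out - delta
        if not math.isclose(residual, 0.0, abs_tol=tolerance):
            failures.append((day, residual))
    return failures


incoming = {"precipitation": [10.0, 5.0], "seepage": [2.0, 2.0]}
outgoing = {"evaporation": [-4.0, -6.0]}
storage_delta = [8.0, 1.0]

print(check_symmetry(incoming, outgoing, storage_delta))

# An outgoing series that is positive where it should be negative.
wrong_sign = {"evaporation": [4.0, 6.0]}
print(check_symmetry(incoming, wrong_sign, storage_delta))
```

The first call reports no failures. The second call reports a residual on both days, which is how a time series with the wrong sign would show up.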

What did I learn from this? Well, when you want to make sure an algorithm and/or its implementation is correct, you can verify that all the steps towards the outcome are correct. You can also maintain multiple test cases to guard against regressions. But never underestimate the power of automated checks of the output. It was a good lesson to learn (and, to be honest, to learn again :)
